o11y-weekly

2023-11-16 #5 Meet Vector

This week, we take a deep look at Vector (vector.dev), from DataDog.

History

- 2018: Vector is born, written in Rust.

- 2021: DataDog acquires Timber Technologies and its Vector project.

Since the DataDog acquisition, Vector appears to focus more on the gateway role than on the agent role. In that role it is used as a pipeline modeled as a Directed Acyclic Graph (DAG).

License

When to use it?

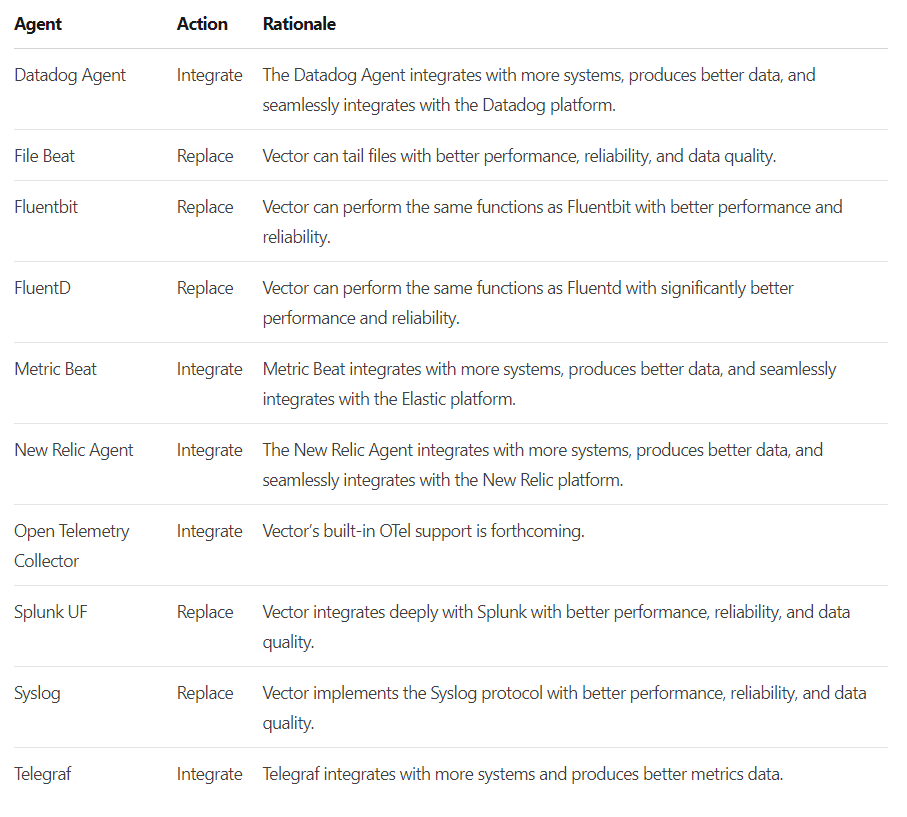

Vector can replace several agents on a server:

- https://vector.dev/docs/setup/going-to-prod/architecting/#1-use-the-best-tool-for-the-job

- https://vector.dev/docs/setup/going-to-prod/architecting/#choosing-agents

Concept

Component: sources, transforms and sinks are the components of the pipeline. The notion is used heavily when monitoring Vector, because its telemetry is reported per component ID.

Vector supports many sources and sinks, such as Logstash, Prometheus, files, Loki, Elasticsearch, …

One of Vector's major features is its transform components.

Transforms

Transforms are the inner nodes of the Directed Acyclic Graph (DAG). They reshape telemetry data by adding tags, filtering events, or converting one signal into another, such as log to metric.
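To make the DAG idea concrete, here is a minimal Python sketch. It is an illustration, not Vector's implementation. Each component lists the IDs it reads from, much like the inputs option, and components run in topological order. A transform may emit zero events (a filter) or a modified event (a remap). Apart from applog_file_raw and applog_file, the component IDs are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical topology: component ID -> IDs it reads from (its "inputs").
TOPOLOGY = {
    "applog_file_raw": [],               # source
    "applog_file": ["applog_file_raw"],  # remap transform
    "only_errors": ["applog_file"],      # filter transform (hypothetical)
    "console_out": ["only_errors"],      # sink (hypothetical)
}


def execution_order(topology):
    """Order components so each one runs after all of its inputs.

    graphlib raises CycleError if the wiring is not acyclic.
    """
    return list(TopologicalSorter(topology).static_order())


def run(topology, source_events, transforms):
    """Propagate events through the DAG and return every component's output.

    source_events: source ID -> list of events
    transforms: component ID -> function(event) -> list of events;
                returning [] drops the event, as a filter would.
    Components without a function (e.g. sinks) pass events through.
    """
    outputs = {}
    for cid in execution_order(topology):
        if not topology[cid]:
            outputs[cid] = list(source_events.get(cid, []))
            continue
        incoming = [event for up in topology[cid] for event in outputs[up]]
        fn = transforms.get(cid, lambda event: [event])
        outputs[cid] = [out for event in incoming for out in fn(event)]
    return outputs
```

Each component only declares its inputs. As a result, a new branch (for example a log-to-metric transform reading from applog_file) can be added without touching the existing components.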

Available transforms include remap (configured with the Vector Remap Language, VRL), dedupe, filter, aggregate, …
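Dedupe is an example of a transform that needs no VRL. It drops events whose selected fields were seen recently. Below is a minimal Python sketch of that behavior, assuming a bounded least-recently-used cache. The function and parameter names are ours, not Vector options.

```python
from collections import OrderedDict


def dedupe(events, match_fields, cache_size=5000):
    """Yield events whose match_fields values were not seen recently.

    Sketch only: keys live in a bounded LRU cache, so a duplicate whose
    key has been evicted passes through again.
    """
    cache = OrderedDict()
    for event in events:
        key = tuple(event.get(field) for field in match_fields)
        if key in cache:
            cache.move_to_end(key)  # refresh recency, then drop the duplicate
            continue
        cache[key] = None
        if len(cache) > cache_size:
            cache.popitem(last=False)  # evict the least recently seen key
        yield event
```

The cache bound is the trade-off: a larger cache catches duplicates that are further apart, at the cost of memory.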

Vector Remap Language

Vector is written in Rust, and VRL is published as a standalone crate on crates.io.

OpenObserve took the opportunity to reuse parts of Vector to build a backend other than DataDog. This is very interesting and deserves a deeper analysis in another post.

Pipeline Graph

The Vector CLI has a graph command, which is handy for visualizing the pipeline. In DataDog, it is even possible to see telemetry overlaid on the graph.
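The graph command prints the topology in the DOT language, which Graphviz can render. To illustrate what such a graph encodes, here is a Python sketch that builds a comparable DOT document from a component map. The topology and kinds are hypothetical, and the real output of the graph command is styled differently.

```python
def to_dot(topology, kinds):
    """Render a component graph as Graphviz DOT text.

    topology: component ID -> list of input IDs
    kinds: component ID -> "source" | "transform" | "sink"
    """
    lines = ["digraph {"]
    for cid in topology:
        # "\\n" emits a literal \n, which DOT renders as a line break
        lines.append(f'  "{cid}" [label="{cid}\\n({kinds[cid]})"]')
    for cid, inputs in topology.items():
        for upstream in inputs:
            lines.append(f'  "{upstream}" -> "{cid}"')
    lines.append("}")
    return "\n".join(lines)
```

Piping the result into dot -Tsvg produces an image of the pipeline.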

Here is the log_2_metric pipeline graph from next week's Vector demo:

Configuration

The configuration can be written in JSON, TOML or CUE (YAML is supported as well).

For example, the configuration can be split as follows:

- vector.toml: global configuration

- sinks.toml: configuration of all sinks

- XXX.toml: one file per pipeline and its tests

- dlq.toml: dead letter queue configuration

Data directory

Depending on the sources, transforms and sinks used, Vector can be stateful. A data directory (the data_dir option) stores Vector's state.

The file source uses the data directory to store checkpoint positions.
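The idea behind checkpointing fits in a few lines of Python. The sketch remembers how far each file was read and persists that offset in the data directory, so a restart resumes where it left off. This is an illustration only. The checkpoints.json file name is hypothetical, and Vector's file source identifies files by fingerprint rather than by path as done here.

```python
import json
import os


def read_new_lines(path, data_dir):
    """Return complete lines appended to path since the previous call.

    The byte offset per path is persisted in data_dir (hypothetical
    checkpoints.json), so a restarted reader neither re-reads nor skips lines.
    """
    checkpoint_file = os.path.join(data_dir, "checkpoints.json")
    try:
        with open(checkpoint_file) as f:
            checkpoints = json.load(f)
    except FileNotFoundError:
        checkpoints = {}
    offset = checkpoints.get(path, 0)
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Only consume complete lines; a partial trailing line is read next time.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode().splitlines()
    checkpoints[path] = offset + end
    tmp = checkpoint_file + ".tmp"
    with open(tmp, "w") as f:
        json.dump(checkpoints, f)
    os.replace(tmp, checkpoint_file)  # atomic swap: a crash never leaves a torn file
    return lines
```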

I/O Telemetry Compatibility

Sources (input) and sinks (output) per protocol:

| Protocol | Input | Output |
|---|---|---|
| OTLP metric | | |
| OTLP log | X | |
| OTLP trace | | |
| vector | X | X |
| datadog | X | X |

Oddly, many DataDog competitors are available as sinks, yet OTLP is only partially supported as an input and no OTLP sink exists. This is a blocker for using Vector as an OTLP gateway.

Error Handling

VRL is a fail-safe language: errors must be handled, and this is verified statically when the VRL program is compiled at Vector startup.

This is a great feature. Potential runtime errors are surfaced by the compiler up front, which makes an unhandled parsing failure hard to write. Fallible functions return either a value or an error, and that error must be handled explicitly.

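```
# 1️⃣ parse key/value pairs and merge them into the event root
. |= parse_key_value!(.message, field_delimiter: "\t", accept_standalone_key: false)
# 2️⃣ parse the timestamp
.timestamp = parse_timestamp!(.t, "%Y-%m-%dT%H:%M:%S%.f%:z")
# 3️⃣ set the job
.job = "vector"
# 4️⃣ drop the raw message
del(.message)
```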

.message holds the raw message to parse.

1️⃣ Parse the message as key/value pairs and merge the resulting object into the event root (.) with |=. parse_key_value returns either the object or an error; the bang (!) operator aborts the program on error.

2️⃣ Add a timestamp field by parsing .t, aborting on error.

3️⃣ Set the job field to "vector".

4️⃣ Remove .message to avoid paying twice for the raw and the structured signal.
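To make abort-on-error concrete, the same four steps can be mirrored in plain Python. Each fallible step raises, and the first failure aborts the whole event. This is an illustrative sketch, not VRL's implementation. Treating a field without "=" as an error is our assumption about accept_standalone_key: false. The timestamp is truncated to microseconds because Python's datetime cannot hold nanoseconds.

```python
import re
from datetime import datetime

# e.g. 2023-11-13T15:53:37.728584030+01:00 (any number of fraction digits)
_TS = re.compile(r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?([+-]\d{2}:\d{2})")


def parse_key_value(message, field_delimiter="\t", kv_delimiter="="):
    result = {}
    for field in message.split(field_delimiter):
        key, sep, value = field.partition(kv_delimiter)
        if not sep:
            # assumption: a standalone key is an error when not accepted
            raise ValueError(f"standalone key not accepted: {field!r}")
        result[key] = value
    return result


def parse_timestamp(value):
    match = _TS.fullmatch(value)
    if match is None:
        raise ValueError(f"unparsable timestamp: {value!r}")
    base, fraction, offset = match.groups()
    micros = (fraction or "").ljust(6, "0")[:6]  # truncate/pad to microseconds
    return datetime.strptime(f"{base}.{micros}{offset}", "%Y-%m-%dT%H:%M:%S.%f%z")


def remap(event):
    """Mirror of the VRL program: any raised error aborts the event."""
    event = dict(event)
    event |= parse_key_value(event["message"])        # 1️⃣
    event["timestamp"] = parse_timestamp(event["t"])  # 2️⃣
    event["job"] = "vector"                           # 3️⃣
    del event["message"]                              # 4️⃣
    return event
```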

⚠️ This VRL program aborts on error. By default, the remap transform then logs the error and forwards the original, unmodified event downstream. A parsing failure does not stop Vector, but it lets an unparsed event through.

Errors can also be handled explicitly in VRL, but at the cost of repeating the same error handling every time.

Instead, it is possible, and recommended, to drop failing events and reroute them, so that no message is lost and every failure can be inspected.

```toml
[transforms.applog_file]
type = "remap"
inputs = ["applog_file_raw"]
file = "config/vrl/keyvalue.vrl"
# forward to a dead letter queue on error or abort
drop_on_error = true
drop_on_abort = true
reroute_dropped = true
```
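The effect of these options can be sketched in Python under a few assumptions. A program is any function that raises on failure, and the error text is attached under a hypothetical error key. A failing event is then either forwarded unmodified (the default, drop_on_error = false), dropped silently, or sent to a separate dropped output.

```python
def remap_with_reroute(events, program, drop_on_error=True, reroute_dropped=True):
    """Sketch of remap's failure options; returns (default_output, dropped_output)."""
    default, dropped = [], []
    for event in events:
        try:
            default.append(program(dict(event)))
        except Exception as exc:
            if not drop_on_error:
                default.append(event)  # original, unmodified event goes on
            elif reroute_dropped:
                # hypothetical "error" key: keep the reason next to the event
                dropped.append({**event, "error": str(exc)})
            # otherwise the event is dropped silently
    return default, dropped
```

Rerouting keeps the happy path clean while every failure stays available for a dead letter queue.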

Dropped events are then emitted on the transform's dropped output, which downstream components reference with the .dropped suffix (here applog_file.dropped).

Here is the associated Loki sink, which forwards all dropped messages:

```toml
## dead letter queue for dropped messages
[sinks.dlq_loki]
type = "loki"
endpoint = "http://loki:3100"
inputs = ["*.dropped"]
encoding = { codec = "json" }
labels = { application = "dead-letter-queue", host = "", pid = "" }
```
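A single dead letter queue sink can catch every transform because Vector expands wildcard patterns in inputs to all matching component outputs. Here is a small sketch of that expansion using shell-style matching. Vector's exact wildcard rules may differ, and nginx_remap below is a hypothetical component.

```python
from fnmatch import fnmatchcase


def resolve_inputs(patterns, available_outputs):
    """Expand input patterns to concrete output names (sketch).

    Uses shell-style matching, where "*" also matches dots.
    """
    resolved = set()
    for pattern in patterns:
        resolved.update(o for o in available_outputs if fnmatchcase(o, pattern))
    return sorted(resolved)
```

Consider the outputs applog_file_raw, applog_file, applog_file.dropped and nginx_remap.dropped. With those, the dead letter queue pattern resolves to the two .dropped outputs, and a remap added later is covered without editing the sink.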

TDD

One of Vector's key concepts is the ability to unit test a transformation pipeline.

This makes it easy to catch and fix a breaking change that causes a parsing failure.

This command runs Vector inside Docker to test the pipeline:

```shell
# quotes keep the host shell from expanding $(pwd) with spaces or the glob itself
docker run --rm -w /vector -v "$(pwd)":/vector/config/ timberio/vector:0.34.0-debian test --config-toml '/vector/config/**/*.toml'
```

Here is a transform test example; assertions can also be written in VRL.

```toml
[[tests]]
name = "Test applog_file parsing"

[[tests.inputs]]
insert_at = "applog_file"
type = "log"

[tests.inputs.log_fields]
message = "t=2023-11-13T15:53:37.728584030+01:00\th=FR-LT-00410\tH=6666\tT=5663"

[[tests.outputs]]
extract_from = "applog_file"

[[tests.outputs.conditions]]
type = "vrl"
source = '''
assert!(is_timestamp(.timestamp))
'''
```
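Conceptually, a unit test inserts events into one transform and checks conditions on what another emits. The following Python sketch mimics that flow for a single hop. It is not how vector test is implemented. The conditions are plain Python predicates standing in for VRL assertions such as is_timestamp.

```python
def run_unit_test(test, transforms):
    """Sketch of a Vector-style unit test, simplified to a single hop.

    Each input event goes through its insert_at transform; each output
    section reads what its extract_from transform produced and checks
    every condition. Returns a list of failure messages.
    """
    produced = {}
    for inp in test["inputs"]:
        transform = transforms[inp["insert_at"]]
        produced.setdefault(inp["insert_at"], []).append(
            transform(dict(inp["log_fields"]))
        )
    failures = []
    for out in test["outputs"]:
        events = produced.get(out["extract_from"], [])
        if not events:
            failures.append(f"{test['name']}: no output from {out['extract_from']}")
        for event in events:
            for condition in out["conditions"]:
                if not condition(event):
                    failures.append(f"{test['name']}: {condition.__name__} failed")
    return failures
```

An empty list means the test passed. A transform that forgets to set the timestamp produces a readable failure instead of silently shipping bad data.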

Monitoring Vector

Vector is well instrumented, and Grafana dashboards are available to monitor it.

The Vector Monitoring dashboard exposes all available telemetry, although, depending on the integration, only part of it is really useful.
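One way to focus on the useful subset is to reduce per-component counters to a few ratios. The sketch below computes an error ratio per component_id from counter samples. It assumes Vector's internal metric names component_received_events_total and component_errors_total, and the input format (name, labels, value) is our own simplification.

```python
from collections import defaultdict


def error_ratio_by_component(samples):
    """Errors per received event for each component_id.

    samples: iterable of (metric_name, labels, value) counter readings.
    """
    received = defaultdict(float)
    errors = defaultdict(float)
    for name, labels, value in samples:
        cid = labels.get("component_id")
        if name == "component_received_events_total":
            received[cid] += value
        elif name == "component_errors_total":
            errors[cid] += value  # summed across error types
    return {cid: errors[cid] / total for cid, total in received.items() if total}
```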

Vector as Node exporter (host metrics)

Vector has a host_metrics source, which can serve as a drop-in replacement for node exporter.

The Node exporter dashboard for Vector is a node exporter dashboard adapted to Vector's host_metrics output.

Agent communication

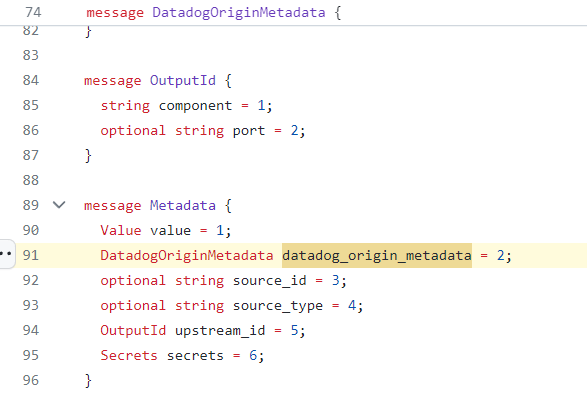

Agent communication is an important topic to understand when talking about observability agents. Vector uses its own gRPC protocol between agents and gateways.

- vector event model proto

- vector service proto (push model with EventWrapper); compare the vector spec with the OTLP proto spec

Instead of using an external standard, Vector has an internal protocol, which can create incompatibilities and OTLP integration issues.

Logs and metrics appear well integrated from the transformation point of view. Tracing support, however, is limited and only works with DataDog, since the only available trace sink is the datadog_traces sink.

Again, three protocols (vector, DataDog and OTLP) have to be kept in sync, which is hard whenever they do not align.

The vector protocol contains DataDog-specific metadata, which is odd from a standards point of view.

The historical context helps explain the trade-off. It seems that the Vector team did not use, or did not see the benefit of, OpenTracing or OpenTelemetry to serialize telemetry to disk.

- 2015/11: OpenTracing spec first commit

- 2017/01: OpenCensus instrumentation first commit

- 2018/09: Vector initial commit

- 2019/02: birth of the vector protocol, still the main communication protocol between agents and gateways at the time of writing

- 2019/05: OpenCensus and OpenTracing (Jaeger tracing) merge into OpenTelemetry (spec + instrumentation)

- 2021: DataDog acquires Vector

Internally, DataDog has built a UI on top of Vector, with aggregated telemetry displayed over Vector graphs.

Conclusion

Vector shines at pipeline transformations such as log-to-metric conversion and aggregation, but it supports neither full OTLP nor traces outside DataDog. It uses its own protocol, which can conflict with OTLP (e.g., OTLP traces).

Outside DataDog, its compatibility is limited to logs and metrics.

How about a true OpenTelemetry-based Vector?

Strengths

- Safe error handling

- Testability

- Documentation

- Resiliency

- Data durability (see buffers)

Weaknesses

- OTLP support: partial OTLP support, Vector <> OTLP conversion and alignment issues

- DataDog vendor lock-in: DataDog specifics leak into the vector protocol

What next?

Next week, a full Vector hands-on will show its strengths in practice.